Micropolis BBS FAQ

What is Dial-up

Historically, users would "call Boards", which meant using the public switched telephone network (PSTN) to establish a connection to a remote computer system running bulletin board software. This was "calling a BBS via a dial-up line", and the lines available on a BBS were usually called "nodes". Such a link is a one-to-one, or point-to-point, channel. Communication on it is protected by the privacy of the PSTN infrastructure and by law, for example Article 11 ("Right to Privacy") of the American Convention on Human Rights, or German legislation such as the "Fernmeldegeheimnis" (secrecy of telecommunications). Users used devices called "modems" to modulate digital data as audio signals that a normal PSTN line could transmit, just like any other human conversation over the phone. Early computer networking wasn't "always on": people called specific computer systems for a certain service. USENET was a dial-up service in its first incarnation. CompuServe in the US, BTX in Germany and Minitel in France were all dial-up, and so were Bulletin Board Systems. Some BBS offered gateways into larger networks, or exchanged messages with networks like FidoNet, FsxNet, RelayNet, WWIVnet, USENET or the World Wide Web (WWW), commonly called "the Internet" today. But all of this was dial-up, which meant that a user was charged a fee for each minute the computer was online.

What is a Modem

A "modem", short for "modulator-demodulator", is a computer hardware device that converts data from a digital format into a format suitable for analog transmission, and back again. With BBS or Internet access, this usually means transmission over a (PSTN) telephone line. This function is most obvious in early modems built as an "acoustic coupler": a device into which a normal telephone handset was placed, like two handsets held against each other in reverse, so that each microphone listens to the other's speaker. With an acoustic coupler, the computer produced sounds for transmission through the phone line that a computer at the other end could pick up, decode and translate back into digital information. A modem transmits data by producing these special sounds, a process that is technically the modulation of one or more carrier wave signals to encode digital information. A receiving modem "hears" the sounds and demodulates the signal to recreate the original digital information. Modem users and dial-up enthusiasts are sometimes called "Modemers". Fax machines also use modems internally and produce the same familiar sounds when connecting.

In hobbyist and amateur computing of the 1970s, 1980s and 1990s, digital data channels between computer systems were usually established over ordinary switched telephone lines that were not designed for data use. Audio quality was low and the signal-to-noise ratio less than perfect. The modulated data therefore had to work within these constraints: it had to fit into the limited frequency spectrum of a normal voice channel and compensate for line defects.
Early modems, including acoustically coupled modems, relied on the user (or on an additional device, an automatic calling unit) to dial and establish a voice connection before putting the handset into the acoustic coupler or switching the modem onto the line. This quickly improved, and later modems were able to perform the tedious steps needed to connect a call through a telephone exchange on their own. The acoustic coupler also disappeared and was replaced by modems that accepted the telephone plug directly (RJ11, "registered jack", a six-position modular connector), as the speaker-to-microphone arrangement was a source of additional noise and interference anyway. Many external modems of that era came in a box roughly the size of a VHS cassette. They were able to pick up the line, dial, understand signals sent back by the phone company's equipment (dial tone, ringing, busy signal), recognize incoming ring signals and answer calls if configured to do so.

One milestone in modem technology was the Hayes Smartmodem, introduced in 1981. The Smartmodem was a 300 baud direct-connect modem, but its innovation was a command language which allowed the computer to send control requests, such as commands to dial or answer calls. A modem was connected to a computer via the RS-232 serial interface, either through a wide DB-25 connector, the smaller DE-9 D-sub connector or custom 9-pin connectors. The Hayes commands were sent over the same connection as the data itself. This was made possible by two operating modes ("data mode" and "command mode"), which could be switched by a special escape sequence surrounded by pauses in the serial data stream (the well-known "+++"). The commands used by this device became a de-facto standard, the "Hayes command set", which was integrated into devices by many other manufacturers, and to this day many kinds of modems, even GSM cellular modems, use the Hayes command set.
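To make the switch between data mode and command mode more concrete, here is a minimal Python sketch of how a modem might recognize the escape sequence while in data mode: a pause, three escape characters in quick succession, then another pause. It assumes the commonly used Hayes defaults of "+" as escape character (S-register S2 = 43) and a guard time of one second (S12 = 50, counted in fiftieths of a second). The function names and the list-of-timestamps input format are hypothetical; real modem firmware works on a live byte stream. A helper that builds a plain dial command (ATDT for tone, ATDP for pulse dialing) is included for completeness.

```python
# Minimal sketch: detecting the Hayes "+++" escape with guard times.
# Assumed defaults: escape character "+" (register S2 = 43) and a guard
# time of 1 second (register S12 = 50, counted in 1/50 s).
ESCAPE_CHAR = "+"
GUARD_TIME = 1.0  # seconds


def escape_detected(events, now, guard=GUARD_TIME, esc=ESCAPE_CHAR):
    """Return True if the data-mode stream ends in <pause> +++ <pause>.

    events: list of (timestamp_in_seconds, character) in arrival order.
    now:    the current time, used to check the trailing pause.
    """
    if len(events) < 3:
        return False
    tail = events[-3:]
    # The last three characters must all be the escape character.
    if any(ch != esc for _, ch in tail):
        return False
    # Leading guard time: no other character shortly before the first "+".
    if len(events) > 3 and tail[0][0] - events[-4][0] < guard:
        return False
    # The three "+" must follow each other faster than the guard time.
    if tail[1][0] - tail[0][0] >= guard or tail[2][0] - tail[1][0] >= guard:
        return False
    # Trailing guard time: the line must stay silent after the last "+".
    return now - tail[2][0] >= guard


def dial_command(number, tone=True):
    """Build a Hayes dial command: ATDT (tone) or ATDP (pulse) plus CR."""
    return ("ATDT" if tone else "ATDP") + number + "\r"


if __name__ == "__main__":
    stream = [(0.0, "h"), (0.1, "i"), (2.0, "+"), (2.1, "+"), (2.2, "+")]
    print(escape_detected(stream, now=3.5))  # True: switch to command mode
    print(escape_detected(stream, now=2.5))  # False: trailing pause too short
    print(repr(dial_command("5551234")))
```

The guard times are what make this in-band scheme workable: a "+++" that appears inside normal data is usually surrounded by other characters and therefore does not trigger command mode.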

Over the years, modem speeds increased from 300 bit/s through 2,400, 9,600 and 14,400 bit/s to tens of kilobits per second. This development came to an end when modems had nearly exhausted the available bandwidth of a phone line, at about 56 kbit/s. The modulation schemes used at that point were so sophisticated that some of them were incompatible between manufacturers despite standardization, and BBS SysOps often advertised which modem model or make they were running on which BBS node.

What is ISDN?

During the 1990s, an evolution of traditional PSTN phone lines reached end users in the form of ISDN, the Integrated Services Digital Network. The public phone system became increasingly digital, and ISDN was the product that ambitious customers could lease in regions where it was available. An ISDN basic rate line offers two 64 kbit/s "bearer" (B) channels for voice or data (56 kbit/s in some countries), which could be bundled for double speed, and a single 16 kbit/s "delta" (D) channel for call signaling and, where providers allowed it, basic always-on data access. With dedicated ISDN cards, users could replace their modems and eliminate the "audio translation" step by switching directly to a channel designed for digital transmission. An ISDN line could use the full bandwidth of the phone channel while maintaining a more reliable and compatible connection than a modem. But as these improvements didn't matter much to the average consumer, ISDN saw relatively little uptake in the wider market, and ISDN data transmission was soon replaced by DSL, most widely rolled out in its ADSL (asymmetric digital subscriber line) variant. ADSL and its successors provide the always-on broadband Internet connections common today, although, surprisingly, hundreds of thousands of users worldwide still connect to the Internet via dial-up and a modem.

Probably one of the most exhaustive guides on modems, their use and technical details, was written by David S. Lawyer and can be found, for example, here. More links to material about modems, BBS and related topics can be found at the end of this document in "Further reading and material elsewhere".

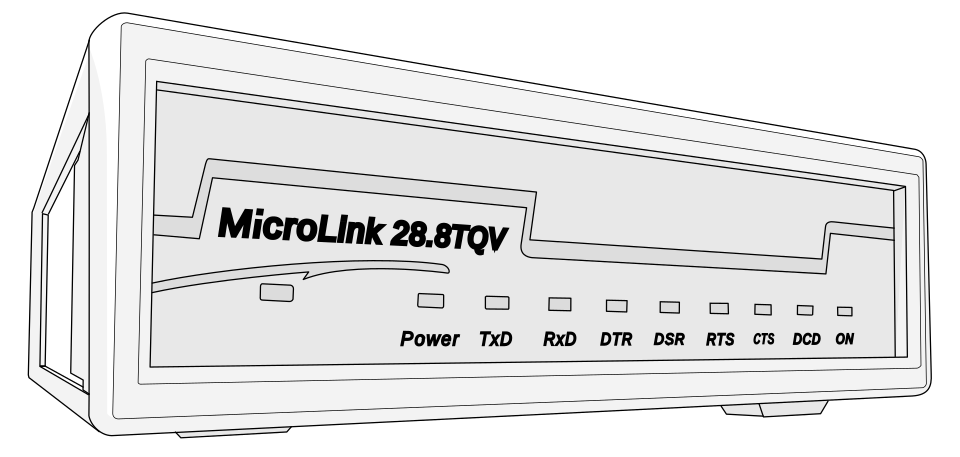

What are the meanings of the abbreviations and lights on the front-panel of my modem?

On the front panel of an analog modem there is usually a row of LED lights, labeled with abbreviations that indicate various device conditions. As a rule of thumb, the more lights are lit, the more complete a connection is. For the actual meaning of the signals, please refer to the section "Connecting your modem", where some basics of RS-232 are outlined. Common labels are:

- TxD - Transmit Data: data is being sent (sometimes just "TX")
- RxD - Receive Data: data is being received (sometimes just "RX")
- DTR - Data Terminal Ready
- DSR - Data Set Ready
- RTS - Request to Send
- CTS - Clear to Send
- DCD - Data Carrier Detect
- OH - Off-Hook: the handset is off the hookswitch; for a modem, which has no handset, it means the modem has "picked up" the line and is "on line"

Old Hayes modems also used these abbreviations:

- HS - High speed (4,800 and above)
- AA - Auto-answer
- CD - Carrier detect
- RD - Receive data (same as RxD)
- SD - Send data (same as TxD)
- TR - Terminal ready (corresponds to DTR)
- MR - Modem ready (corresponds roughly to DSR)
- VO - Voice
- RI - Ring indicator

How can I dial into a BBS in 2023?

There's a renaissance of Bulletin Board Systems going on worldwide, and retro computer enthusiasts old and young are using various means to connect to BBS. Many of these new old-skool "Boards" are reachable via Telnet, and some even via a dial-up phone line.

via in-browser Telnet

Connecting to a BBS via Telnet is probably the easiest way to explore the hidden realms of Bulletin Board Systems. You can opt for an in-browser Telnet emulator, use a terminal emulator on the command line or fire up an all-in-one communication package with built-in dialer, phone book and terminal emulation.

via Modem

Traditionally, you would connect to a BBS via the public phone system, using a modem. This document has pointers on how to connect a modem to your computer, how terminals work and how you can get them working nowadays, and you can find links to directories of active BBS that still offer dial-up nodes. One thing to point out is that the transition to an all-digital, IP-based telephone infrastructure (Voice over IP, VoIP) may interfere with how modems work, and modems may fail to set up a connection. Read the section "Using an analog modem over a Voice-over-IP line" for additional help with that.

via Modem-Emulation (which is actually TCP/IP Telnet)

Many retro home computers, like the C64, have everything needed to control a modem and dial into a BBS. But users may not want to tie up a landline, or they might want to connect to a BBS that doesn't offer dial-up nodes. On the other hand, many retro computers have no means of talking Telnet over TCP/IP. For this scenario there are nifty adapters such as the "WiFi232" or the "WiModem232". Such an adapter connects to your computer and emulates a Hayes compatible modem for your system. Your computer talks to it over the serial line as if it were a real modem; only the AT command to dial a number is slightly different. Instead of a phone number, you specify an IP address or domain name plus an optional Telnet port. The modem emulator then initiates a TCP/IP connection to this system. The emulator itself is connected to your WiFi network and has full Internet access. It works like a Modem-Telnet bridge for your older computer system.

What is Baud

Baud (abbreviated as "Bd", named after Émile Baudot) is the unit used to measure the symbol rate of a transmission over a data channel. It counts the changes of signal state (also called "symbols", "transitions" or "steps") on a transmission channel per second: 1 Baud equals one step per second. It is not the same as bits per second ("bit/s" or "bps"), although the two are very often used interchangeably. Depending on the modulation scheme in use, each symbol can encode several bits, for example four or six, which is why the bps rate of a transmission is usually higher than its Baud rate. V.32 uses a symbol rate of 2400 Baud and transmits at 9600 bps, i.e. 4 bits per symbol. V.32bis sends 6 bits per symbol at 2400 Baud for 14400 bps.

The fact that a modem's speed is usually given in bps isn't only due to marketing using the higher number to advertise a product; it has the same historic reason why people tend to use Baud and bps interchangeably. Early modems encoded one bit per symbol, so the bps and Baud numbers were the same, and both were used to describe the effective bit rate, or "speed", of a modem. When newer modem standards arrived, the effective bit rate started to exceed the Baud rate, and confusion ensued.
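The relationship between symbol rate and bit rate is a simple multiplication, and the number of distinct signal states a modulation needs grows as a power of two with the bits per symbol. The Python sketch below works through the examples from the text. It counts data bits only and ignores the extra redundancy bit that trellis-coded schemes such as V.32 and V.32bis can add to each symbol; the function names are illustrative.

```python
def bit_rate(baud, bits_per_symbol):
    """Data bit rate in bit/s = symbol rate (Baud) x data bits per symbol."""
    return baud * bits_per_symbol


def constellation_points(bits_per_symbol):
    """Distinct signal states needed to carry n data bits per symbol: 2**n."""
    return 2 ** bits_per_symbol


for name, baud, bits in [
    ("early modem, 1 bit per symbol", 300, 1),
    ("V.32", 2400, 4),
    ("V.32bis", 2400, 6),
]:
    print(f"{name}: {baud} Baud x {bits} bit/symbol = "
          f"{bit_rate(baud, bits)} bit/s, "
          f"{constellation_points(bits)} distinct symbols")
```

For the 300 Baud case both numbers are 300, which is exactly why early users could treat "Baud" and "bps" as synonyms.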

One more detail worth noting is that there's usually a difference between the data rate used between modems (modem-to-modem) and the rate used on the serial cable between your computer and your modem (computer-to-modem). The first, the "DCE" rate, is the speed a modem uses for communication over a common telephone line and is usually given in bps. The abbreviation "DCE" is short for "Data Communications Equipment", which means "your modem". The other abbreviation, "DTE", is short for "Data Terminal Equipment" and denotes your computer (or terminal), the device your modem is connected to. The DTE rate is also given in bps, and on these local serial lines the Baud rate and the bit rate are usually the same. Knowing about this difference between DTE and DCE, you should, strictly speaking, refer to the DCE rate of a modem when you mean the rate between modems.
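On the DTE side, the bps figure does not translate 1:1 into bytes per second, because each byte on an asynchronous serial line is framed by a start bit and at least one stop bit. The Python sketch below assumes the common "8N1" setting (8 data bits, no parity, 1 stop bit), i.e. 10 bit times per byte. It also illustrates why the DTE rate is usually set higher than the DCE rate: if the modem compresses data (for example with V.42bis), the computer can deliver more data than the raw modem-to-modem rate carries. The 2:1 compression ratio used here is an assumed example for illustration, not a guaranteed figure.

```python
def serial_bytes_per_second(bps, data_bits=8, parity=False, stop_bits=1):
    """Payload bytes per second on an asynchronous serial (DTE) line.

    Each character costs 1 start bit + data bits + optional parity bit
    + stop bits; "8N1" therefore needs 10 bit times per byte.
    """
    bits_per_char = 1 + data_bits + (1 if parity else 0) + stop_bits
    return bps / bits_per_char


dce_rate = 14400          # modem-to-modem rate in bit/s
compression_ratio = 2.0   # assumed compression gain, for illustration only
needed_dte = dce_rate * compression_ratio

print(serial_bytes_per_second(115200))  # 11520.0 bytes/s at 115200 bps, 8N1
print(serial_bytes_per_second(9600))    # 960.0 bytes/s at 9600 bps, 8N1
print(f"DTE rate should be at least {needed_dte:.0f} bps")
```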

What is a Terminal?

A computer terminal is an electronic (or even electromechanical) device that is used for entering data into, and displaying or printing data coming from, a computer system. Most common are character-oriented terminals, where the terminal displays entered ("echoed") or received characters on screen (historically on paper). A user either types text strings on a prompt line or the system outputs lines of text, one character at a time, usually 80 characters per line, as this is how many characters would fit in one line on a piece of office paper. Each line is completed with a "carriage return" and a "line feed". This way the output is scrolled, so that only the last several lines are visible (typically 24 or 25, on an 80x24 or 80x25 character screen). It depends on the terminal implementation (or today on its mode of operation) whether a text string is buffered until the ENTER key is pressed, so the application receives a complete line of text, or whether each character is transmitted right after input.

The DEC VT100 was one of the first terminals to support special "escape sequences" or "escape codes" for cursor control, text line manipulation and manipulation of the keyboard status, lights etc. Its scheme of escape codes became the de-facto standard for hardware video terminals and later terminal emulators, and is closely associated with the "ANSI X3.64" standard for terminals. In MS-DOS these escape codes are usable through the ANSI.SYS driver, which emulates an "ANSI terminal". This further contributed to the widespread use of the term "ANSI" for an "extended ASCII" character set with optional escape sequences to control the cursor or color specific parts of the output.
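As a small illustration of such escape sequences, the Python sketch below builds three common ANSI X3.64 / VT100 control sequences as plain strings: erasing the display, positioning the cursor and erasing a line. All of them start with the Control Sequence Introducer "ESC [". The helper names are hypothetical, and how a particular terminal honors these sequences may still vary.

```python
ESC = "\x1b"
CSI = ESC + "["  # Control Sequence Introducer


def clear_screen():
    """ED: erase the entire display (CSI 2 J)."""
    return CSI + "2J"


def cursor_to(row, col):
    """CUP: move the cursor to row/column, both counted from 1 (CSI r;c H)."""
    return f"{CSI}{row};{col}H"


def erase_line():
    """EL: erase the whole current line (CSI 2 K)."""
    return CSI + "2K"


if __name__ == "__main__":
    # Clear the screen, home the cursor, then print a line at row 12, col 30.
    print(clear_screen() + cursor_to(1, 1), end="")
    print(cursor_to(12, 30) + "Welcome to the BBS!", end="")
    print(cursor_to(24, 1))
```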

Note that a terminal or terminal emulator is not Telnet. A terminal receives, processes and displays serial data according to the ANSI terminal specs. It won't understand or process Telnet's "interpret as command" sequences or the various other Telnet-specific terminal extensions. But terminal emulators can usually run telnet as a layer between a remote system and their display, running telnet "in fullscreen mode", where telnet then feeds "serial ANSI data" to the actual terminal display.

As with many de-facto standards, the problem here is that there are many different terminals and terminal emulators, each with its own set of escape sequences or capabilities. Special libraries (such as curses) have been created, together with terminal description databases such as termcap and terminfo, to help unify "talking" to different terminals. But to this day, especially with color, the actual output of a specific escape sequence can't really be predicted and even depends on user settings or active desktop themes. To stay with the example of text coloring, there is no strict definition of a specific color. Although the "ANSI standard" defines basic colors by name, systems and users may define which shade of red, blue, green, etc. is actually displayed. Further, the ANSI escape code for "bold" text is commonly used to get 8 brighter shades in addition to the 8 basic colors. But as this, too, is just a convention, it can't be expected that "bold red" actually outputs "bright red" and not just "a red character in bold", or some completely unrelated shade of color. The aixterm specification tried to resolve at least this ambiguity with dedicated codes for bright colors, but it is an equally non-binding suggestion.

Later terminals started accepting longer escape sequences for colors, with more numerical values selecting from a larger palette of shades, but implementation differences again resulted in not entirely predictable color rendering. Today's terminal emulators, like xterm, Ubuntu's terminal, GNOME Terminal, KDE's Konsole, iTerm and libvte-based terminals, support 24-bit "true color" with an escape sequence carrying an RGB triplet, similar to rgb or hex colors in HTML.
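To illustrate the different levels of color support described above, here is a Python sketch that builds SGR ("Select Graphic Rendition") sequences for the 8 basic ANSI colors, the "bold" trick, the aixterm bright colors (codes 90 to 97), the 256-color palette and 24-bit "true color". Which of these a given terminal understands, and which shade you actually see, still depends on the terminal and its settings. The helper names are hypothetical.

```python
CSI = "\x1b["
RESET = CSI + "0m"
BASIC = ["black", "red", "green", "yellow", "blue", "magenta", "cyan", "white"]


def sgr(*params):
    """Build a Select Graphic Rendition sequence, e.g. CSI 1;31 m."""
    return CSI + ";".join(str(p) for p in params) + "m"


def basic_fg(name, bold=False):
    """8-color foreground (30-37); 'bold' (1) is often shown as a bright shade."""
    code = 30 + BASIC.index(name)
    return sgr(1, code) if bold else sgr(code)


def aixterm_bright_fg(name):
    """aixterm extension: bright foreground colors 90-97."""
    return sgr(90 + BASIC.index(name))


def palette_fg(index):
    """256-color palette foreground: CSI 38;5;n m."""
    return sgr(38, 5, index)


def truecolor_fg(hex_color):
    """24-bit foreground from an HTML-style hex color: CSI 38;2;r;g;b m."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return sgr(38, 2, r, g, b)


if __name__ == "__main__":
    for label, seq in [("basic red", basic_fg("red")),
                       ("bold red", basic_fg("red", bold=True)),
                       ("aixterm bright red", aixterm_bright_fg("red")),
                       ("palette 208", palette_fg(208)),
                       ("true color #ff8800", truecolor_fg("#ff8800"))]:
        print(seq + label + RESET)
```

Running this in different terminal emulators is a quick way to see the ambiguity in practice: "bold red" and "aixterm bright red" may or may not look the same.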

One historical note is that electromechanical devices called "teleprinters" (or "teletypewriters", "teletypes" or "TTYs") were used for telegraphy and later for more generic typed text transmission. Developed in the late 1830s and 1840s, they saw more widespread use in the late 1880s. Decades later, such machines were adapted to provide a user interface to early mainframe computers and minicomputers, sending typed data to the computer and printing the response. Today's computer systems still use the term "TTY" in reminiscence of these machines and call a virtual console or virtual terminal, the text user interface of a computer system, a "tty".

One anecdote related to early terminals is that many computer hobbyists converted an IBM Selectric electric typewriter into a computer terminal, basically by adding an RS-232 serial interface to it. This was possible because the Selectric had a unique printing mechanism featuring a "typeball" carrying 88 characters. Using a clever mechanism called a "whiffletree", digital input was converted into analog ball steering motions (effectively a simple digital-to-analog converter, DAC). Converting an IBM Selectric was a relatively inexpensive and fairly popular way of getting a printing computer terminal during the late 1960s and throughout the 1970s. The machine produced high-quality hard-copy computer output and could be driven at its optimum and relatively high data rate of 134.5 baud. Selectric conversion terminals were so popular that computer manufacturers even started supporting this 134.5 baud data rate alongside the more common 110 baud.

Detecting your terminal type

As the example of terminal text color has shown, not all terminals behave consistently. This led to the development of the termcap ("terminal capabilities") database and its successor terminfo, and to the introduction of the "tput" command. On Linux, tput is provided by the ncurses package and is a front end to the terminfo database, usually found in /usr/share/terminfo/. This database describes the vast number of terminals found in the wild and records the escape code sequences they use for a fixed set of common terminal operations. These operations are exposed through a fixed vocabulary of capability names, the terminfo API, which tput gives you access to. For example, if you write your own program that should send the cursor to the home position in the top left corner of the display, you don't have to detect the terminal yourself or look up the specific escape codes for different terminals; instead, you use tput. tput reads the type of terminal you're currently using from the $TERM environment variable, looks up the sequence for "home" in the terminfo database and writes it to STDOUT for you (hence "tput"). To try it, run

$ echo $TERM

to see what your terminal would tell tput about itself. Then try

$ tput home

This terminal detection works locally, but not over Telnet or in sessions with a remote BBS: the $TERM variable isn't transmitted as part of some standardized "Telnet handshake". This shortcoming is partly remedied by the Telnet TERMINAL-TYPE option (TTYPE), which a proper Telnet implementation will answer with a string similar to the value of the $TERM environment variable. But as so often with Telnet and terminals, this is a moving target. For example, the well-known terminal program HyperTerminal on Windows (with its built-in Telnet implementation) identifies itself as "ANSI" by default. Feed this to tput via the -T switch and tput will tell you that it doesn't know a terminal named "ANSI".

Also, BBS-style serial communication was common in the early days of personal computing, and calling a BBS usually meant that people using an IBM compatible PC called a "DOS BBS", while Atari owners called Boards for Atari users, Commodore users called Commodore BBS, and so forth. Within these closed circles it was quite safe to assume a specific set of capabilities at the other end of the (dial-up) line. Also, these connections usually didn't include a Telnet client in the communication chain (compare the notes about a terminal emulator not being Telnet in this document). Detecting the type of remote terminal mattered more in professional use or in today's renaissance of BBS, where people might call a BBS via Telnet or dial-up, with a Windows PC, a Mac, from a Linux box or with their beloved retro computer running some ancient terminal emulator. That's why many BBS, in the olden days and to this day, ask their visitors a few questions about the terminal in use upon connect.
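How a server asks for the terminal type over Telnet can be sketched in a few lines of Python. The byte values come from RFC 854 (IAC, SB, SE, DO, WILL) and RFC 1091 (TERMINAL-TYPE option 24, with the sub-commands IS = 0 and SEND = 1). The exchange is: server sends DO TERMINAL-TYPE, client agrees with WILL, server sends a SEND subnegotiation, client answers with an IS subnegotiation carrying its name. Function names and the example terminal names are illustrative; a real server would also handle clients that refuse the option with WON'T, and would read these bytes from a socket.

```python
IAC, SB, SE, WILL, DO = 255, 250, 240, 251, 253
TERMINAL_TYPE = 24        # option code, RFC 1091
IS, SEND = 0, 1           # TERMINAL-TYPE sub-commands, RFC 1091


def server_do():
    """Server: ask the client to use the TERMINAL-TYPE option."""
    return bytes([IAC, DO, TERMINAL_TYPE])


def client_will():
    """Client: agree to use the TERMINAL-TYPE option."""
    return bytes([IAC, WILL, TERMINAL_TYPE])


def server_send():
    """Server: request the terminal name (IAC SB TTYPE SEND IAC SE)."""
    return bytes([IAC, SB, TERMINAL_TYPE, SEND, IAC, SE])


def client_is(term_name):
    """Client: report the terminal name (IAC SB TTYPE IS <name> IAC SE)."""
    return (bytes([IAC, SB, TERMINAL_TYPE, IS])
            + term_name.encode("ascii") + bytes([IAC, SE]))


def parse_terminal_type(data):
    """Server: extract the name from an IS subnegotiation, or None."""
    marker = bytes([IAC, SB, TERMINAL_TYPE, IS])
    start = data.find(marker)
    if start == -1:
        return None
    start += len(marker)
    end = data.find(bytes([IAC, SE]), start)
    if end == -1:
        return None
    return data[start:end].decode("ascii")


if __name__ == "__main__":
    for label, chunk in [("server", server_do()), ("client", client_will()),
                         ("server", server_send()), ("client", client_is("ANSI"))]:
        print(label, chunk.hex(" "))
    print(parse_terminal_type(client_is("ANSI")))
```

The name the server receives is exactly the kind of string you would then try with tput -T, with the limitation described above.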

What is Telnet?

Telnet is an old protocol for transmitting keystrokes from your keyboard to a remote system and receiving on-screen feedback, text and simple graphics, on your local screen, as if you were sitting in front of the remote system's console. Telnet is one of the first Internet standards, and its name is commonly explained as an abbreviation of "teletype network". Telnet is a simple protocol layer that may operate on a serial data link, like an RS-232 serial line, where data bytes are transmitted as electrical signals at a certain speed (baud rate), or over more sophisticated networks like the 1970s/1980s Network Control Protocol (NCP) or today's TCP/IP protocol stack, which has long replaced NCP.

The Telnet endpoint of a remote system is usually reachable under the remote system's IP address or domain name on port 23.

Note that Telnet and "a terminal" aren't the same thing. Telnet is an element or "layer" that usually sits between a terminal or terminal emulator and a remote system. Telnet clients are able to respond to so-called "interpret as command" (IAC) byte sequences and change their behavior accordingly. A terminal doesn't use or understand these IAC sequences, so Telnet extends what a terminal can do. As a gateway element in a communication data stream, Telnet outputs a "serial ANSI X3.64 conforming" data stream to a connected terminal, or to the terminal emulator it is running in (usually in "full screen" mode), and the terminal emulator displays this data.

Telnet is an unencrypted, clear text protocol. Historically, it was assumed that the "line" you are communicating over is private by design, for example a dedicated phone line, twisted copper wire, an analog PSTN line or later a digital ISDN channel. These technologies didn't require an encrypted envelope. But when Telnet communication migrated to the Internet and public IP networks, the need for encryption arose, and Telnet was largely superseded by SSH (Secure Shell Protocol). SSH serves the same purpose of remote terminal access, but uses elaborate mechanisms for a safe encrypted handshake upon initial connection and then communicates via fully encrypted packets over the transport channel, usually on port 22.

Some BBSs offer Telnet on ports other than 23. This is mostly to make it a bit harder for bots and similar agents to find and abuse the service on such a well-known port. It may also stem from the fact that on Unix-like systems, opening a port below 1024 requires elevated privileges, and a service running with such privileges exposes the system to greater threats.

What are Telnet commands?

Over Telnet, all data octets except 0xff are transmitted as is. There are three common numeral systems (or notations) to describe byte values in computing: hexadecimal, decimal and octal. The byte "0xff" in hexadecimal, "255" in decimal or "377" in octal notation is the highest value an 8-bit byte can represent, and in Telnet it is called the "IAC byte", IAC for "Interpret As Command". This byte signals that the next byte is a Telnet command. The command to insert 0xff into the stream as data is 0xff itself, so a data byte 0xff must be escaped by doubling it when sending data over the Telnet protocol.

Note that "ANSI escape codes" are a different thing from "Telnet commands". Telnet commands are exchanged between the Telnet client and server to control the connection itself, while ANSI escape codes control the presentation of content within a terminal. Refer to the section on Terminals and ANSI escape codes for an explanation of the latter.

Telnet is described in RFC 854, and this RFC also details how IAC works (on page 13 of the RFC): "All Telnet commands consist of at least a two byte sequence: the 'Interpret as Command' (IAC) escape character followed by the code for the command. The commands dealing with option negotiation are three byte sequences, the third byte being the code for the option referenced. This format was chosen so that a more comprehensive use of the "data space" is made, (...) collisions of data bytes with reserved command values will be minimized, all such collisions requiring the inconvenience, and inefficiency, of 'escaping' the data bytes into the stream. With the current set-up, only the IAC need be doubled to be sent as data, and the other 255 codes may be passed transparently."
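The doubling rule quoted above is easy to get wrong, so here is a minimal Python sketch of it: escape_iac() doubles every 0xFF byte before data is sent, and unescape_iac() turns "IAC IAC" back into a single data byte. The sketch deliberately handles only the escaping; any other byte following an IAC is a command and is rejected here rather than interpreted. Both function names are hypothetical.

```python
IAC = 0xFF  # 255, "Interpret As Command"


def escape_iac(data: bytes) -> bytes:
    """Double every IAC byte so it is transmitted as data (RFC 854)."""
    return data.replace(bytes([IAC]), bytes([IAC, IAC]))


def unescape_iac(data: bytes) -> bytes:
    """Collapse IAC IAC back into one 0xFF data byte.

    Raises ValueError if a lone IAC (i.e. a command) is found, because
    command handling is outside the scope of this sketch.
    """
    out = bytearray()
    i = 0
    while i < len(data):
        b = data[i]
        if b == IAC:
            if i + 1 < len(data) and data[i + 1] == IAC:
                out.append(IAC)
                i += 2
                continue
            raise ValueError(f"Telnet command at offset {i}, not data")
        out.append(b)
        i += 1
    return bytes(out)


if __name__ == "__main__":
    payload = bytes([0x41, 0xFF, 0x42])    # "A", 255, "B"
    wire = escape_iac(payload)
    print(wire.hex(" "))                  # 41 ff ff 42
    print(unescape_iac(wire) == payload)  # True
```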

The following are the defined TELNET commands. Note that these codes and code sequences have the indicated meaning only when immediately preceded by an IAC.

| Name | Code (decimal) | Meaning |
|---|---|---|
| SE | 240 | End of subnegotiation parameters. |
| NOP | 241 | No operation. |
| Data Mark | 242 | The data stream portion of a Sync. This should always be accompanied by a TCP Urgent notification. |
| Break | 243 | NVT character BRK. |
| Interrupt Process | 244 | The function IP. |
| Abort output | 245 | The function AO. |
| Are You There | 246 | The function AYT. |
| Erase character | 247 | The function EC. |
| Erase Line | 248 | The function EL. |
| Go ahead | 249 | The GA signal. |
| SB | 250 | Indicates that what follows is subnegotiation of the indicated option. |
| WILL (option code) | 251 | Indicates the desire to begin performing, or confirmation that you are now performing, the indicated option. |
| WON'T (option code) | 252 | Indicates the refusal to perform, or continue performing, the indicated option. |
| DO (option code) | 253 | Indicates the request that the other party perform, or confirmation that you are expecting the other party to perform, the indicated option. |
| DON'T (option code) | 254 | Indicates the demand that the other party stop performing, or confirmation that you are no longer expecting the other party to perform, the indicated option. |
| IAC | 255 | Data Byte 255. |

A Telnet client and server may negotiate options using Telnet commands at any stage during the connection. Well over 40 Telnet options were specified in various RFCs over the years, but in practice only about a dozen are commonly used:

| Code (decimal) | Name of the option | RFC defining the option |
|---|---|---|
| 0 | Transmit Binary | 856 |
| 1 | Echo | 857 |
| 3 | Suppress Go Ahead | 858 |
| 5 | Status | 859 |
| 6 | Timing Mark | 860 |
| 24 | Terminal Type | 1091 |
| 31 | Window Size | 1073 |
| 32 | Terminal Speed | 1079 |
| 33 | Remote Flow Control | 1372 |
| 34 | Linemode | 1184 |
| 36 | Environment Variables | 1408 |
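Putting the two tables together, the following Python sketch splits an incoming byte stream into plain data and Telnet commands, and answers option negotiation with the simplest possible policy: refuse every option (DO is answered with WON'T, WILL with DON'T). Refusing is always an allowed answer to a request to enable an option, but a real client would enable at least some options, such as Suppress Go Ahead or Terminal Type. WON'T and DON'T need no answer here, since this policy never enables anything, which also avoids negotiation loops. The sketch assumes that a command sequence is never split across two reads; the function name is hypothetical.

```python
IAC, DONT, DO, WONT, WILL, SB, SE = 255, 254, 253, 252, 251, 250, 240


def process(stream: bytes):
    """Return (data, reply) for one chunk of received Telnet bytes.

    Policy (hypothetical minimal client): refuse every option.
      DO x   -> WONT x
      WILL x -> DONT x
      WONT/DONT need no answer, since no option was ever enabled.
    Subnegotiations (IAC SB ... IAC SE) and two-byte commands are skipped.
    """
    data, reply = bytearray(), bytearray()
    i, n = 0, len(stream)
    while i < n:
        b = stream[i]
        if b != IAC:
            data.append(b)
            i += 1
            continue
        if i + 1 >= n:                 # lone IAC at the end: dropped here
            break
        cmd = stream[i + 1]
        if cmd == IAC:                 # escaped 0xFF data byte
            data.append(IAC)
            i += 2
        elif cmd in (DO, DONT, WILL, WONT):
            if i + 2 >= n:             # incomplete sequence: dropped here
                break
            opt = stream[i + 2]
            if cmd == DO:
                reply += bytes([IAC, WONT, opt])
            elif cmd == WILL:
                reply += bytes([IAC, DONT, opt])
            i += 3
        elif cmd == SB:                # skip to the closing IAC SE
            end = stream.find(bytes([IAC, SE]), i + 2)
            i = n if end == -1 else end + 2
        else:                          # NOP, GA, AYT, ... (two bytes)
            i += 2
    return bytes(data), bytes(reply)


if __name__ == "__main__":
    # Server says: DO TERMINAL-TYPE (24), WILL ECHO (1), then "Hi"
    incoming = bytes([IAC, DO, 24, IAC, WILL, 1]) + b"Hi"
    data, reply = process(incoming)
    print(data)              # b'Hi'
    print(reply.hex(" "))    # ff fc 18 ff fe 01
```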

Is there a secure Telnet?

So, Telnet is a "cleartext" protocol, without any means to encrypt the data it sends. But hey, Telnet is only one layer of the communication channel - wouldn't it be possible to send Telnet over a secure channel of sorts? Well, yes, and there are in essence two ways to make Telnet a secure way of communicating.

The most obvious way of securing a Telnet communication is by sending it over a channel that is secure per se. A land-line dial-up connection, where Telnet is used as a one-to-one channel inside a medium protected by telecommunication standards, is secure Telnet. So is using Telnet on a private network or through a virtual private network (VPN). In short, if you tunnel Telnet through a secure or encrypted channel, Telnet can be secure - but remember that Telnet on its own isn't. The other way is TelnetS, described in the next section.

What is TelnetS?

Yes, you read that right: it's Telnet with an "S" at the end, and it doesn't mean the plural of Telnet. There is a not so well known "standard" for a secured version of Telnet, and its name is "TelnetS". We write the last letter as a capital here to distinguish it from the plural form of the word. Just as FTP has FTPS (FTP over TLS/SSL, not to be confused with SFTP, the SSH File Transfer Protocol) and HTTP has HTTPS, a suffixed "S" indicates that TelnetS is Telnet over TLS/SSL (Transport Layer Security / Secure Sockets Layer).

TelnetS is enabled by a modified Telnet daemon, telnetd, which is available on Debian systems via the package telnetd-ssl.

The SSL telnetd daemon replaces the normal telnetd and adds SSL authentication and encryption. It interoperates with the normal telnetd in both directions: it checks whether the other side is also talking SSL, and if not, it falls back to the normal Telnet protocol. In other words, when both sides use SSL, passwords and data are not sent over the line in cleartext. By convention, secure telnetd listens for incoming connections on port 992. The bad news about this "secure Telnet" is that it is neither well supported nor well known. This might be due to the unfortunate naming, or to SSH being available as an alternative. Outside of IBM, where TelnetS was suggested as a secure alternative for some systems, you won't really find it in the wild.

Note that a server talking TelnetS also requires your client to be TelnetS enabled. A telnet executable with SSL support compiled in might provide the "-z" switch, with which you can request SSL encryption ("-z SSL"). Such a telnet client can also come in handy for debugging HTTPS connections by connecting to port 443 with it.

One other note is that TelnetS is not the same as using a command that is able to establish an arbitrary network connection, like netcat or openssl. While you may use a command like "openssl s_client -host example.com -port 992" to connect to an SSL encrypted endpoint, openssl won't be able to really "speak" Telnet. That's because TelnetS, just like Telnet, partly relies on special binary escape sequences which openssl won't understand. These binary sequences are explained above in "What are Telnet commands?". In short, Telnet uses the IAC (interpret as command) byte (value 255, hex FF) to start a command sequence. If this byte is sent as data, it must be "escaped" by prepending another 255/hex FF byte (on the wire: "IAC IAC", "255 255", "FF FF"). Not "knowing" about this protocol convention and not obeying it means the data stream will become corrupted once the remote Telnet server starts to negotiate protocol specific things.
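For illustration, here is how a TelnetS connection could be opened from Python with the standard socket and ssl modules, assuming a server that speaks Telnet over TLS on port 992. The helper names are hypothetical and the sketch only performs the TLS handshake and a single read. As explained above, the bytes that arrive are still Telnet, so a real client has to handle IAC sequences on top of this TLS layer.

```python
import socket
import ssl

TELNETS_PORT = 992  # port conventionally used for Telnet over TLS/SSL


def make_context():
    """TLS settings: verify the server certificate and its host name."""
    return ssl.create_default_context()


def open_telnets(host, port=TELNETS_PORT, timeout=10.0):
    """Open a TCP connection and wrap it in TLS; returns an SSL socket."""
    raw = socket.create_connection((host, port), timeout=timeout)
    try:
        return make_context().wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()
        raise


def read_greeting(host, port=TELNETS_PORT, size=4096):
    """Connect, read the first chunk the server sends and return it.

    The result is raw Telnet: 0xFF bytes start commands, not text.
    """
    with open_telnets(host, port) as conn:
        return conn.recv(size)
```

Calling read_greeting("your.telnets.host") against a real TelnetS server would return its first bytes, which often begin with IAC negotiation sequences rather than plain text. A server with a self-signed certificate will fail verification with these default settings, which is intentional in this sketch.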

What Terminal emulators can I use?

Note that some terminal emulators are just that: a terminal emulator. Other programs are communication suites, made of a component to talk to modems via serial line or other connections, a phone book or address database to manage your collection of BBS or Telnet servers, logging and tracing facilities to record sessions with remote Boards, and finally a terminal emulator that handles the actual connection, sometimes with optional SSH support, plus software extensions to handle in-band or out-of-band file uploads and downloads via popular binary transfer protocols like KERMIT, YMODEM, XMODEM or ZMODEM and their variants.

At this point you may have understood that a terminal or terminal emulator is more like a "window" or "browser": it is how you "look" at a data stream, but how this data stream arrives at your system is another story. Telnet is one way, a directly connected socket another, and it may also arrive via a device that presents itself as a serial port. Terminals may have built-in means to bring data to your "window", for example via a dial-up line with an integrated phonebook in a communication suite designed for modem communication, or via a package with built-in Telnet, SSH, or all of those. But a terminal on its own might also be very bare-bones, and that in turn might suffice for serial connections, as commonly used to talk to development boards like the Arduino or ESP8266. Examples of bare-bones "only a terminal" applications are gtkterm and HTerm, which is excellent for serial line debugging.

On Linux / *nix

On *nix systems there is a selection of terminal programs available, often simply called "the console", for example xterm, rxvt, GNOME Terminal and Konsole.

These terminals and command consoles usually support at least a portion of the ANSI escape code sequences to control your display, text and cursor layout. Terminal emulators are typically used to interact with a Unix shell locally on a machine. To access a remote system, there needs to be a bridge in between that communicates with this remote system via the network. In early computing, this tool was the unencrypted Telnet protocol, implemented in the similarly named telnet program. So in order to "dial" into a BBS, which in essence means accessing a remote system via terminal emulator, you run telnet inside your terminal emulator to go online. The telnet command then acts as a "full screen" application inside your terminal emulator. The telnet command is probably the first option anyone would choose, but the very handy screen program can also be run in "telnet mode".

- Linux telnet command in your terminal of choice

- Linux "screen" in Telnet mode

Few people know that the popular screen terminal emulator and multiplexer is able to operate in "Telnet mode" when it was compiled with the ENABLE_TELNET option defined (which is usually the case). Start screen like so:

$ screen //telnet 127.0.0.1 23

to connect to localhost in telnet mode at port 23. When the first argument to screen is "//telnet", it expects a host and a port as the 2nd and 3rd parameters. Refer to the screen man page for further help, but usage is generally straightforward.

For BBS SysOps it might be interesting to note that screen in Telnet mode will identify itself with the name "screen" in response to a TTYPE

request, unless instructed otherwise.
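For SysOps who want to see what such an identification looks like on the wire, here is a minimal Python sketch of the Telnet TTYPE (terminal type, option 24, RFC 1091) exchange, after both sides have agreed on the option via "IAC DO TTYPE" and "IAC WILL TTYPE". The function names are hypothetical; the byte values are those of the Telnet specifications.

```python
IAC, SB, SE = 255, 250, 240  # Telnet command bytes
TTYPE = 24                   # TERMINAL-TYPE option
TTYPE_IS, TTYPE_SEND = 0, 1  # subnegotiation codes

def is_ttype_request(seq: bytes) -> bool:
    """True if seq is the server's 'IAC SB TTYPE SEND IAC SE' request."""
    return seq == bytes([IAC, SB, TTYPE, TTYPE_SEND, IAC, SE])

def ttype_reply(name: str) -> bytes:
    """Build the client's 'IAC SB TTYPE IS <name> IAC SE' answer."""
    return bytes([IAC, SB, TTYPE, TTYPE_IS]) + name.encode("ascii") + bytes([IAC, SE])

request = bytes([IAC, SB, TTYPE, TTYPE_SEND, IAC, SE])
if is_ttype_request(request):
    print(ttype_reply("screen"))  # b'\xff\xfa\x18\x00screen\xff\xf0'
```

A BBS typically uses the returned name to decide whether to send ANSI, plain ASCII or other screens.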

- PuTTY - stand-alone software implemented terminal emulator and telnet communication program.

- Qodem (under wine) - an excellent terminal and communication suite. It emulates a number of terminals faithfully, has an integrated phonebook and best-in-class features like reliable session capturing, and renders fonts like few others. Even on Linux under wine it performs flawlessly, and once you get the hang of the "all keyboard shortcuts" interface, it is a joy to use.

- Tera Term (under wine, see below) - despite some rough edges in font configuration and color interpretation, a solid terminal.

- HyperTerminal (under wine, see below)

- Netrunner

- SyncTERM

- kermit

- uxterm, rxvt, xterm, urxvt, dtterm - there are many more terminals on *nix systems

- cu - very simple terminal with dial-in functionality ("cu" is short for "call up")

- tip - very simple terminal from the early days to connect to remote systems, also with dial-in and socket connect functionality

On Windows / DOS

IBM PC clones had an 80×25 character display mode with 16 colors and special graphics characters. This is what is commonly known as "ANSI", or more precisely the IBM "OEM font" CP437 with support for ANSI color (escape codes, as interpreted by ANSI.SYS). Despite that, DOS and Windows didn't really come with a capable default terminal client. That said, there are a number of good options available, and the Windows-bundled HyperTerminal isn't necessarily the best of them.

PuTTY - a mature cross-platform terminal. On the PC, though, it is usually set to the wrong character set by default. So open the configuration and go to "Window" > "Translation". Look for the option "Remote character set" and change ISO-8859-1 to UTF-8 to have PuTTY properly render UTF-8 encoded CP437 block characters etc.
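Why the character set setting matters: the same bytes turn into different glyphs depending on the character set the terminal assumes. A small Python illustration using the standard "cp437", "latin-1" and "utf-8" codecs (the variable names are just for this example):

```python
# The four classic shading/block glyphs of the IBM PC character set (CP437).
raw = bytes([0xB0, 0xB1, 0xB2, 0xDB])

as_cp437 = raw.decode("cp437")     # what the ANSI artist intended
as_latin1 = raw.decode("latin-1")  # what a terminal set to ISO-8859-1 shows
print(as_cp437)   # ░▒▓█
print(as_latin1)  # °±²Û

# A BBS sending UTF-8 transmits the same glyphs as multi-byte sequences,
# so the terminal's "Remote character set" has to match what the sender uses.
utf8_wire = as_cp437.encode("utf-8")
print(len(raw), len(utf8_wire))  # 4 12
```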

Hyperterminal

On Windows up to roughly Windows XP Professional, the system came with a default terminal emulator called "HyperTerminal", hypertrm.exe on the filesystem. It didn't ship with Windows 7/8/10. In order to run HyperTerminal you only need two files: hypertrm.dll and the executable hypertrm.exe. You will commonly find hypertrm.exe in C:\Program Files\Windows NT and hypertrm.dll in C:\Windows\System32. If you can't find it on your system, or you are running a Linux box but would like to use HyperTerminal under wine (which works), you can get hold of an "Installation" or "System Builder" CD-ROM and extract the two files from there.

On the Windows XP CD, both of these files are in the i386 directory. Copy them over, and while you're at it, copying hypertrm.chm and hypertrm.hlp as well wouldn't hurt. As you might notice, the file suffixes are not .exe or .dll: the files on the installation medium end in an underscore, "hypertrm.ex_" and "hypertrm.dl_". These are compressed archives, so-called .CAB (Cabinet) files, an archive format used by Microsoft. They can easily be extracted with common archiving utilities, like the file-roller "built into" Ubuntu. On Windows, any compression utility should do the job as well, even the one built into Explorer: select both files, right-click on any of the highlighted files and click on "Extract".

Like any other terminal emulator out there, HyperTerminal implements cursor and display behavior in response to terminal escape sequences (ANSI escape codes), so character-mode full-screen applications (the lynx browser, for example, or, obviously, telnet) can run in HyperTerminal's terminal window. Telnet is built into HyperTerminal.

If you don't just start it up but go into HyperTerminal's settings, you can choose from a set of built-in terminal configurations so that HyperTerminal emulates specific terminal types. And here comes the problem: none of them is implemented correctly. The one named "VT100" works so-so but does not pass the extended characters commonly used for basic graphics. The one called "ANSIW" at least allows 8-bit ASCII mode and interprets ANSI color codes, but CP437 graphic chars end up garbled. Also, most other things, like the default line terminator, are wrong and need to be changed.

Once you are connected to some Telnet system, go to "File" > "Properties" to change settings for the current connection. In the pop-up, switch to the second tab, "Settings". "Emulation:" should be "ANSIW". "Telnet terminal ID" may say "ANSI" or "VT100", or whatever you had set before: it's the string HyperTerminal sends in response to a Telnet TTYPE (option 24) request by a remote system. Setting it to ANSI should be safe: the other side then won't expect xterm or even xterm-256color capabilities, but knows that HyperTerminal is able to receive ANSI color codes. Now click on the button on the lower right, "ASCII Setup". In the upper options for "ASCII Sending", check both boxes, "Send line ends with line feeds" and "Echo typed characters locally". In the lower area for "ASCII Receiving", do not check "Append line feeds to incoming line ends" and do not check "Force incoming data to 7-bit ASCII", but you may check the option to break (wrap) too long lines. If you see very strange glyphs instead of legible characters on the display, try opening "View" and there "Change font". Select a different font and the displayed text should become readable. Once you have all those things in place, you should get half-baked terminal emulation with hypertrm.exe for basic Telnet, but expect newlines to still be messed up.

Contrary to common belief, HyperTerminal wasn't written by Microsoft but by Hilgraeve, a Detroit/Michigan company, working for Microsoft as a subcontractor. And the version shipped with Microsoft Windows is, well, let's say an "early version". The company continued to improve the software on its own and still sells it on its website as HyperTerminal PE ("PE" for "private edition"), along with its most up-to-date product, "HyperACCESS".

If you're adventurous and want to run the original Windows binary under Linux within wine, you can do so. It works. Just don't use the initial "connection pop-up" that comes up when you open the program; close it and go to "Call" to trigger a new connection pop-up. Otherwise wine will crash. Enter some name in the "New connection" pop-up and click "OK". In the next pop-up you can select "Connect via:" > "TCP/IP (Winsock)" from a drop-down selector, instead of the pre-selected serial port "COM1". Once you select "TCP/IP (Winsock)", you are offered inputs for "Host address" and "Port number", the latter pre-populated with Telnet's common port 23. Once you're connected, make sure to change the settings for terminal emulation as described above.

Tera Term

Tera Term (alternatively TeraTerm) is a free, open-source, software-implemented terminal emulator program from Japan. It emulates different types of computer terminals, from DEC VT100 to DEC VT382, and can be run on DOS/Windows or under wine emulation on Linux. It has its own Wikipedia page and can be downloaded from ttssh2.osdn.jp or www.teraterm.org, as both domains point to the same server.

Setup can be a bit tricky. Once you've extracted the zip archive, locate the main binary ttermpro.exe. Don't try editing the shipped TERATERM.INI file, as it's difficult to understand. Instead use the GUI of the program itself, which does the same. Upon start, a "New connection" dialog comes up. Enter a server name or IP for "Host" and a "TCP port#". Select "Telnet" as "Service", as Tera Term by default expects an SSH connection. Usually, the first terminal output after "Connect" will be all gibberish, as the program is from Japan and the default settings expect Japanese ideograms. Go to "Setup" > "Font" > "Font..." and in the opened dialog select some western font you like and click "OK"; the text should now be legible, refreshed without a reconnect. Then go to "Setup" > "Terminal..." and check "Local echo", and while you're in this dialog, enter "american" for "Locale".

- Telix (often shipped with ELSA modems)

On Commodore 64

The Commodore 64 ("breadbin") has a native resolution of 40 by 25 characters. This comes from the hardware text mode of the

VIC-II video chip the C64 uses. The built-in character set uses 8 bytes (for 8×8 pixels) for each of the 256 characters. These

hardware capabilities mean that an 80 column mode (like on a VT100 ASCII terminal or an IBM PC-compatible emulating one) can

only be implemented in software, and on the C64 this means using "bitmap mode". In "bitmap mode" the C64 exhibits a native

resolution of 320 by 200 pixels. If one wants to display 80 chars per line in this mode, it means a screen budget of 4×8 pixels

per character. Given that there needs to be a one-pixel gap between characters to be legible, characters can effectively only

be 3 pixels wide.
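The arithmetic behind this, as a tiny Python calculation (the constant names are just labels for the figures given above):

```python
# Screen budget for a software 80-column mode in the C64's 320x200 bitmap mode.
BITMAP_WIDTH, BITMAP_HEIGHT = 320, 200
COLUMNS, ROWS = 80, 25

cell_width = BITMAP_WIDTH // COLUMNS  # horizontal pixels per character cell
cell_height = BITMAP_HEIGHT // ROWS   # vertical pixels per character cell
glyph_width = cell_width - 1          # keep a one-pixel gap between characters

print(cell_width, cell_height, glyph_width)  # 4 8 3

# For comparison: the native 40-column text mode gives each character 8 pixels.
print(BITMAP_WIDTH // 40)  # 8
```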

- CCGMS - terminal for the C64 with many extended and modded versions available

- Handy Term - another C64 terminal and communication suite

- GGLabs Terminal - a VT100 terminal emulator with monochrome display, a custom software 80-column display and support for serial speeds up to 115200 baud

- CGTerm - while technically not a terminal running on the C64, CGTerm emulates how connecting to a Commodore BBS would look on native hardware, only cross-platform.

On Commodore AMIGA

The Amiga's standard display resolution is 320×200 @ 60Hz (NTSC) and 320×256 @ 50Hz (PAL), the same width as the C64's bitmap mode, but its high-resolution modes double the horizontal resolution to 640 pixels, so the Amiga is usually capable of displaying 80-column terminal screens. But as the Amiga is somewhere between the olden days and newer systems, some terminal programs can be toggled between 80 and 40 column modes.

Most terminals are available from Aminet. A video tutorial on how to "dial" from an Amiga into Telnet via a USB Telnet bridge is here.

- 64Door

- ColorTerm

- A-Talk III - by Felsina Software, 1986, published by OXXI

- Amtelnet, DCTelnet, virterm, AmTelnet-II, Term

On Commodore PET

The very early hardware of the Commodore PET makes connecting to a BBS a DIY adventure, but it is doable, as described here.

- McTERM - released in 1980 by Madison Computer from Madison, WI, USA, manuals here.

- PETTERM - on github

- Terminal v11 (listing in Transactor Magazine)

On Atari ST/STE/MEGA/TT/Falcon

Note that with the Atari ST emulator "Hatari" it is possible to run a terminal program on an emulated Atari and "dial" into modern

BBS via Telnet.

- Connect 95 - released 1992 by Lars & Wolfgang Wander, Shareware

- FLASH - released 1986 by Joe Chiazzese & Alan Page, VT100 emulation with ZMODEM integration

- TAZ - released 1994 by Neat n Nifty, able to do ANSI 16 Color

- ANSITerm - by Timothy Miller, released by Two World Software, only term able to display PC-like 16 color ANSI emulation with the help of a custom 3 pixel wide font and running in low resolution mode

- NeoCom - Shareware, ASCII/VT52/ANSI Emulation

- Freeze Dried Terminal (FzDT)

- BobTerm - 40 columns and only up to 300 baud

- VanTerm

On Apple / Macintosh

On Apple systems, terminals usually only look good after installing and using a font that has old-style block characters

as required by the CP437 charset. Look for a font named "Andale Mono", which has these chars. Also note that Apple terminals

tend to skew the CGA color scheme into a bleached realm. If you want to make sure that ANSI art looks as originally intended,

you can invest the time to configure your colors along the original CGA specification.

- iTerm/iTerm2 - set a proper font like Andale Mono in "Preferences" > "Profiles", tab "Text".

- Terminal.app - define a custom profile in "Preferences" > profile "Pro" and set "Andale Mono" as font; also set "use bright colors for bold text".

On MSX

- BadCat terminal (by Andres Ortiz, load via bdterm.bin, 80 cols mode via bdshell)

Connecting your modem

Here we'll discuss how to connect your modem to set things up. First, some notes on how to connect your modem to the phone line and then notes on how to connect

your modem to your computer, the different options with serial and USB and how to debug your terminal setup.

Connecting your modem to the phone line

The telephone line the modem is connected to must provide loop disconnect signaling (pulse dialing) or DTMF signaling (tone dialing). Tone dialing is by far the

most used method world wide and your phone line usually is of the tone dial type.

The modem is connected via standard modular telephone jack, type RJ11.

Within a private branch exchange (PABX), dialing an external number may require the user to insert a PSTN access digit, e.g. the digit 9 (external access code).

PBX systems are known from larger office installations but are also common in private homes.

A telephone line carries direct current, and alternating current for ring signals. The telephone line circuit (TNV, telecommunication network voltage) can carry hazardous voltage and must not be touched.

On some modems, there are two RJ11 jacks (small rectangular connectors, usually accepting clear plastic plugs), one for "PHONE" and another for "LINE". In case your modem has only one connector, make sure you use the correct line cable between your modem and the wall outlet, as cables may differ in how the lines are wired through.

In parts of Europe, namely Germany, Luxembourg and Liechtenstein, a wall connector named TAE connector is used (in Austria, the similarly shaped TDO connector). There are different plugs, with different contact plates, for TAE connector type "F" (for telephones, "Fernsprechgerät" in German) and type "N" (for other devices such as answering machines and modems, German "Nebengerät" or "Nebenstelle"). Some TAE outlets also feature a connector labeled "U", which is short for "universal device" and is meant for both fax machines and phones. Usually such an outlet is equivalent to an NFF TAE socket, with the "U" connector on the right ("NFU"), and, as on an NFF socket, the U connector represents a separate, second F line. On modern xDSL routers offering a legacy TAE socket, two separate telephone lines may be presented via such an NFU socket, and the lines may be configurable via the router's administration interface.

Note that if your modem is plugged into a TAE wall outlet with a cable meant for type "F", it will get incoming ring signals, but it won't be able to seize the line and will not work! You must use a cable with an "N"-type wall plug and an RJ11 plug on the other end to plug into your modem. Getting a RING signal in your terminal on incoming calls does not mean the line is correctly connected! Also note that once you plug a TAE "N" plug into the wall outlet, you break the circuit for the "F" connector, so the RJ11 plug on the other end must be plugged into the "N" device (your modem) as well, or the phone connected to the "F" connector won't work anymore.

Background is that the "N" sockets on a TAE outlet form a daisy chain. Your provider's lines enter the outlet and connect to pins 1 and 2 of the right-most "N" connector. From there, service is daisy-chained: it leaves the first "N" device via pins 5 and 6 and is wired inside the outlet to pins 1 and 2 of the next "N" connector to the left. If the next connector is an "F" connector, it is the last element in this daisy chain, receiving service on pins 1 and 2. That's why a dangling "N" cable breaks the chain.

With modems, there's another complication regarding this daisy chain. As modems are commonly connected to the "N" socket, they need to keep the chain closed in their inactive state, so the voice device can receive a signal. On older, standards-conforming modems, this is usually realized with a relay. You can hear this relay click any time such a modem "picks up" and goes "off hook", breaking the voice line while it's active. Newer modems, from around the year 2000 on, often omitted this relay to save cost. A "bridged cable" or "bridged connector" was bundled instead, where the modem connects to the service lines without the return lines, in a parallel circuit with the voice device. This goes against the TAE idea and creates a stub line, which introduces crackling and noise into the line. So if plugging in your modem cuts off the signal to your voice device, be aware that such a relay-less modem requires a "bridged cable".

[Diagram: a TAE plug seen from the side, and the "F" and "N" coded plugs seen from the front, with their numbered contacts.]

Pin   Name   Used for
1     La     Exchange line a
2     Lb     Exchange line b
3     W      External bell (obsolete since the mid-1990s)
4     E      Ground connection (requests an external line in very old telephone installations)
5     b2     Line b, looped through the telecommunication device
6     a2     Line a, looped through the telecommunication device

In order to establish a connection with another modem you must know whether your modem is connected to a main line or to an extension in a private telephone system

(private automatic branch exchange, PABX or PBX). Private telephone exchanges use different methods of getting a dial tone. Some require users to press the "Flash"

key or you need to dial an "escape digit" (e.g. 0 or 9) to get a dial tone. You must also know if your phone company is using pulse or tone dialing. This can be

determined by listening to the dialing sound. If you hear a rattling sound after each dialed number (and usually telephones using pulse dial feature a circular dial),

you have pulse dialing. If you hear the touch-tone beeps when dialing, then you have tone dialing. Pulse dialing is very rare these days.

You can manually tell your modem to dial. If you're on a main line, and want to dial the example number 123456, enter

ATDP123456 for pulse dialing, and

ATDT123456 for tone dialing.

You probably figured out the format already: the opening "AT" command (mnemonic "attention!"), followed by "DT" (for "dial, tone"), followed by a number. For extension lines, this command is varied with a "W" for "wait for dial tone":

ATDT0 W 123456

For clarity, we inserted spaces here between "ATDT0" ("dial zero"), "W" ("wait") and the number. It doesn't matter where you put spaces. The following variant replaces the zero "0" with an ampersand "&" to trigger a simulated "Flash key" to get a dial tone:

AT DT &W 123456

When dialing an extension, or when you have issues getting a dial tone with a line connected to a PBX system, it may be necessary to tell the modem to "blind dial" without waiting for a dial tone first. A PBX system might not provide a dial tone although the line is already prepared to "go out". So, if you don't hear a dial tone after the Flash button or escape digit, or in any case where you have issues getting a dial tone, you can enable "blind dialing" via the command "ATX3" (see the section on Hayes commands for the details).

ATX3DT123

Here we see multiple commands after the "AT", the "X3" for blind dialing, followed by "DT" for "dial, tone" and the number of a local branch of a PBX.
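The pattern behind these command lines can be captured in a small Python helper. This is a sketch: the function and parameter names are made up for illustration, it only covers the modifiers shown above (not the "&" flash variant), and you would still have to write the resulting string to your serial device yourself. Hayes-compatible modems execute a command line once they receive a carriage return, hence the trailing "\r".

```python
def dial_command(number: str, tone: bool = True, prefix: str = "",
                 wait_for_tone: bool = False, blind: bool = False) -> str:
    """Assemble a Hayes dial command line such as 'ATDT0W123456'.

    prefix: PBX access digit (e.g. "0" or "9"), empty on a main line.
    wait_for_tone: add the "W" dial modifier after the prefix.
    blind: prepend "X3" so the modem dials without waiting for a dial tone.
    """
    cmd = "AT"
    if blind:
        cmd += "X3"
    cmd += "DT" if tone else "DP"  # tone or pulse dialing
    cmd += prefix
    if wait_for_tone:
        cmd += "W"
    return cmd + number + "\r"     # the modem executes the line on carriage return

print(repr(dial_command("123456")))                                  # 'ATDT123456\r'
print(repr(dial_command("123456", tone=False)))                      # 'ATDP123456\r'
print(repr(dial_command("123456", prefix="0", wait_for_tone=True)))  # 'ATDT0W123456\r'
print(repr(dial_command("123", blind=True)))                         # 'ATX3DT123\r'
```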

Connecting your modem to the computer

As we have learned in "What is Baud?", there are two connections involved in using a modem on a computer: the serial connection from your computer (and its serial port) to the modem (computer-to-modem), and the connection between the modem and some remote modem (modem-to-modem). The first, local computer-to-modem connection is usually an RS-232 serial connection. RS-232 is a standard for serial data transmission and dates back to 1960. When people describe the devices involved in an RS-232 serial connection, they speak of the DTE (Data Terminal Equipment) to mean the terminal or computer, and the DCE (Data Communications Equipment), your modem. One mnemonic to tell them apart is that "DTE" contains a "T" like in "a VT100 Terminal".

That said, things have moved on since the 1990s and there are USB modems: on such devices, the common serial interface of a modem has been replaced, or adapted internally, by a built-in USB (Universal Serial Bus) interface, for easier connection to computers that no longer have a COM port.

Is RS-232 the same as RS-232 C?

So you turned your modem around, and on the rear DB9 or DB25 connector there's a label stating that it is an RS232C serial port (and it's usually a "female" connector with sockets, not pins). So what's the difference between RS232C and RS232? The answer is: there's no real difference, the "C" just denotes the revision of the RS232 standard that is the basis for this connector. As RS232 dates back to 1960, the standard has seen various revisions, from RS-232-A to RS-232-B and then RS-232-C. The most important thing that changed across these revisions is the line voltage. The original RS232 operated on 25 Volts, later revisions also accepted 12 Volts and then even 5 Volts. But devices communicating via RS-232-C still need to tolerate signals of 25 Volts, although they may use lower voltages. Note also that RS-232 signals are bipolar: a "mark" (logic 1) is a negative voltage between -3 and -15 Volts, a "space" (logic 0) a positive voltage between +3 and +15 Volts. That means that the serial adapters commonly used to connect developer boards like the Arduino or ESP8266 can't be used directly. These usually operate on what are commonly called "TTL levels" ("logic levels"), meaning signals between 0 and 5 Volts or 0 and 3.3 Volts, very often aren't protected against higher (or negative) voltages, and might burn on an RS232 connection. They would need what is called a "level shifter" to communicate via RS-232. In turn, devices advertising RS-232-C often just use the voltage the device as a whole operates on, like 9V, as the voltage available to the line driver circuit. But some RS-232 driver chips have built-in circuitry to produce a different voltage just for the serial line. For the actual data transmission, the exact voltage levels aren't really important, as long as the receiving hardware is able to discern mark and space signals above the noise floor on the wire.
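How a receiver tells mark from space can be written down as a small Python function. This is a simplified model of the RS-232 thresholds (the function name is hypothetical, and real receiver chips differ in where exactly they switch inside the undefined band), but it shows why a 0 V/5 V TTL adapter never produces a valid "mark":

```python
def rs232_level(volts: float) -> str:
    """Classify a voltage on an RS-232 data line (simplified model).

    -3 V to -15 V is a "mark" (logic 1, also the idle state),
    +3 V to +15 V is a "space" (logic 0). Between -3 V and +3 V the
    level is undefined; beyond +/-15 V it is outside the signal range.
    """
    if -15.0 <= volts <= -3.0:
        return "mark"
    if 3.0 <= volts <= 15.0:
        return "space"
    if -3.0 < volts < 3.0:
        return "undefined"
    return "out of range"

# Typical +/-12 V RS-232 levels versus the 0 V / 5 V of a TTL adapter.
print([rs232_level(v) for v in (-12, 12, 0, 5)])
# ['mark', 'space', 'undefined', 'space']
```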

On computers, the RS232 interface is usually called the COM port (for "communication") and named COM1, COM2, etc. As RS-232 was harmonized with the ITU-T (formerly CCITT) standard V.24, it is also often called "V.24". On old Hayes modems, the RS232 connector was labeled "DTE interface".

Serial connections are usually made with cables having a DB-9 or DB-25 connector. A computer, the DTE device, normally features a "male" connector (with pins). DCE devices, like modems, usually come with "female" connectors, having sockets. Some modems, especially later and smaller models, used a circular 9-pin connector to save space. But connectors in general vary, and even the gender of DB-9 connectors may vary. You can use a voltmeter to confirm which side of the communication connection you are looking at: on a DB-9 connector, measure the voltage between pin 3 and pin 5 (Signal Ground). Getting a voltage of -3V to -15V means the connector is on a DTE device. Getting such a voltage on pin 2 (again against pin 5) means you're looking at a DCE device.
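The measuring rule above, written down as a small Python decision function. The function names are hypothetical, and the assumption that an unconnected receive pin reads close to 0 V is typical but not guaranteed; devices with unusual line drivers may not follow this model.

```python
def is_mark(volts: float) -> bool:
    """An idle RS-232 transmitter sits at "mark": -3 V to -15 V."""
    return -15.0 <= volts <= -3.0

def guess_side(pin2_volts: float, pin3_volts: float) -> str:
    """Guess DTE or DCE from idle DB-9 voltages, each measured against pin 5.

    A DTE transmits on pin 3, a DCE transmits on pin 2; the receiving pin of
    an unconnected device is assumed to read close to 0 V.
    """
    if is_mark(pin3_volts) and not is_mark(pin2_volts):
        return "DTE"
    if is_mark(pin2_volts) and not is_mark(pin3_volts):
        return "DCE"
    return "inconclusive"

print(guess_side(pin2_volts=0.1, pin3_volts=-11.8))  # DTE
print(guess_side(pin2_volts=-9.5, pin3_volts=0.0))   # DCE
```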

A DB-25 connector with 25 pins is actually the "original" serial connector. It features additional pins for additional "metadata" about the data connection; for example, pins 15, 17 and 24 convey clock signals, which are only used for synchronous communication. One important difference is that Signal Ground is on pin 7 on DB-25 connectors and on pin 5 on DB-9 connectors.
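For reference, here is the usual mapping between the two connectors for the nine signals an asynchronous modem connection uses, as a Python lookup table. It follows the common PC (IBM AT) DB-9 assignment; the table name is just for this example, and synchronous-only pins like the clocks are left out.

```python
# Signal -> (DB-9 pin, DB-25 pin), as seen on the DTE (computer) side.
DB9_TO_DB25 = {
    "DCD (Data Carrier Detect)": (1, 8),
    "RxD (Receive Data)": (2, 3),
    "TxD (Transmit Data)": (3, 2),
    "DTR (Data Terminal Ready)": (4, 20),
    "GND (Signal Ground)": (5, 7),
    "DSR (Data Set Ready)": (6, 6),
    "RTS (Request To Send)": (7, 4),
    "CTS (Clear To Send)": (8, 5),
    "RI (Ring Indicator)": (9, 22),
}

for signal, (db9, db25) in DB9_TO_DB25.items():
    print(f"{signal:<28} DB-9 pin {db9:>2}  ->  DB-25 pin {db25:>2}")
```

Note how TxD and RxD swap pin numbers between the two connector types, a common trap when building DB-9 to DB-25 adapters.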

What is a simple Straight-Through serial cable, and what a Null-Modem cable?

A straight-through serial cable (sometimes called a "one to one" cable) is usually used between your computer and your modem. Straight-Through is the cable type

that by definition is used to connect a DTE device (PC) to a DCE device (a modem or some other communications device). The name refers to how the wires in such a

cable are connected: on a straight-through cable the transmit and receive lines are not cross-connected, but, you already guessed it, "straight through". If you

look at the schematic of such a connection, you'll notice that it is "mirror symmetrical". A straight through cable is just an extension of the connector on a device,

the cable connector on the other end of the cable has the same pinout as the connector on the device.

DB-9 connector                     DB-9 connector
(2) RX --------------------------- RX (2)
(3) TX --------------------------- TX (3)
(5) Ground ----------------------- Ground (5)

A Null Modem cable (sometimes called a "crossover" cable) is used to connect two DTE devices (two computers, for example) together. As such, it is commonly found in early home computer multi-player applications, where two computers, like two Amigas, could be connected to talk to each other. A Null Modem cable does what a serial conversation between two equal devices needs: it connects the Transmit ("TX", or "TxD") pin of one device to the Receive ("RX", or "RxD") pin of the other device, so that signals sent by one device reach the other as incoming, received signals, and vice versa. It is also the cabling needed when something like a USB serial adapter is used to talk to a development board, like an Arduino or ESP8266. It's the standard scenario when devices actually talk to each other. A straight-through cable, in contrast, is just an extension cord used to "relocate" a connector on a device somewhere else.

DB-9 connector                     DB-9 connector
(2) RX --------------------------- TX (3)
(3) TX --------------------------- RX (2)
(5) Ground ----------------------- Ground (5)

As a straight-through cable is just an "extension cord", you can attach a Null modem cable to a straight-through cable to turn the whole cable into a null

modem cable with crossed lines.

And in contrast to a Null Modem cable, serial cables shipped with common analog telephone modems of the 1990s were usually of the "straight-through" cable type.

A little more involved is the more complete wiring of a Null Modem cable with handshake. Nearly all pins of a DB-9 connector are connected through here. That's

because Hardware Handshaking between devices is done via additional pins that need to be connected through. The "Request to Send" (RTS) pin of one device is then connected

to the "Clear to Send" (CTS) pin of the other device, and the "Data Set Ready" (DSR) pin is connected to the "Data Terminal Ready" (DTR) pin of the other connector. But

note that the below wiring is just one of many control pin wiring variations found in the wild.

DB-9 connector                     DB-9 connector
(2) RX --------------------------- TX (3)
(3) TX --------------------------- RX (2)
(4) DTR -------------------------- DSR (6)
(5) Ground ----------------------- Ground (5)
(6) DSR -------------------------- DTR (4)
(7) RTS -------------------------- CTS (8)
(8) CTS -------------------------- RTS (7)
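The difference between these three wirings can be checked mechanically. A minimal Python model, assuming both ends are DTEs with the standard DB-9 pinout: each cable is a set of (pin on end A, pin on end B) pairs read off the diagrams above, and a cable links two DTEs if TX/RX are crossed, the grounds are joined, and every output a device drives lands on the matching input of the other device. All names are illustrative only.

```python
# DB-9 pin roles as seen from a DTE (computer) connector.
OUTPUTS = {3: "TxD", 4: "DTR", 7: "RTS"}  # lines the device drives
INPUTS = {2: "RxD", 6: "DSR", 8: "CTS"}   # lines the device reads
PARTNER = {"TxD": "RxD", "DTR": "DSR", "RTS": "CTS"}  # output -> input it must reach
GROUND = 5

# Each cable: set of (pin on end A, pin on end B) pairs.
STRAIGHT_THROUGH = {(2, 2), (3, 3), (5, 5)}
NULL_MODEM = {(2, 3), (3, 2), (5, 5)}
NULL_MODEM_HANDSHAKE = {(2, 3), (3, 2), (4, 6), (6, 4), (7, 8), (8, 7), (5, 5)}

def links_two_dtes(cable: set) -> bool:
    """True if two DTEs can talk over this cable (see model above)."""
    if (GROUND, GROUND) not in cable or not {(2, 3), (3, 2)} <= cable:
        return False
    for a, b in cable:
        for src, dst in ((a, b), (b, a)):  # check both directions of each wire
            if src in OUTPUTS and INPUTS.get(dst) != PARTNER[OUTPUTS[src]]:
                return False
    return True

for name, cable in [("straight-through", STRAIGHT_THROUGH),
                    ("null modem", NULL_MODEM),
                    ("null modem with handshake", NULL_MODEM_HANDSHAKE)]:
    print(f"{name}: {links_two_dtes(cable)}")
```

The straight-through cable fails this check by design: it is meant for DTE-to-DCE connections, where the modem's pinout already does the "crossing".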

Troubleshooting a serial connection

The first thing to make sure is that your terminal application actually works. That means: confirm it can send data to your serial port. With modern systems, a serial adapter (or serial converter) is usually attached via USB. Internal serial cards are rare, and on-board serial (UART) interfaces are usually not used on an average desktop PC. So it's usually a USB dongle of sorts.

If you're on Linux, watch syslog while you connect the device (tail -f /var/log/syslog) and observe whether it gets properly recognized and activated. lsusb is also a handy command to see if your serial adapter shows up. Then head over to the /dev directory and find your USB device among the TTY devices. With these small USB devices, the serial adapter is usually /dev/ttyUSB0. Note that when you list /dev, ls will probably display the adapter as owned by root and group "dialout". Your current user usually isn't in group "dialout", and therefore you won't have permission to access this serial adapter. To remedy that, either add yourself to "dialout" (this takes effect once you log in again):

$ sudo usermod -a -G dialout $USER

or just change ownership of the plugged-in adapter to your user (this lasts until the adapter is plugged in again):

$ sudo chown $USER /dev/ttyUSB0

Once you've done that and commands executed as your user have access to the adapter, you need to confirm that serial data is actually sent with the current configuration, hardware setup and terminal of choice. It may happen that everything seems to be configured correctly and your terminal appears to be sending data, while the terminal itself is the reason nothing gets sent. So remove the terminal from the equation and set up serial in the most reduced way. For that, you can safely connect the Tx and Rx pins of the actual hardware connector, so data is sent (echoed) right back in the shortest loop possible. Check if this works by opening two command shells (terminals) side by side. In one shell, fire up a simple read command, and in the other, write to the device via echo. On *nix systems everything is a file, and you can write to the serial TTY device just as you would to a normal file.

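$ stty -F /dev/ttyUSB0 raw -echo
$ cat /dev/ttyUSB0

and

$ /bin/echo -n -e "AT\r\n" > /dev/ttyUSB0

The stty call puts the port into raw mode and switches off its local echo; otherwise the TTY layer may echo the looped-back data out again, so that it circles endlessly.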

You should now see the "AT" string make the round trip and come back as an echo: send it once, get it once. Remove the wire bridge and confirm that the "AT" no longer arrives in the shell where cat is reading the device. This example uses "AT" because it is the most basic Hayes modem command: if a modem is already connected and the connection works, you should see your "AT" echoed by the modem and acknowledged with "OK". Once this works, you can move on to a more elaborate terminal application. Only if that application can repeat this simple procedure do you know it is actually working and able to write to the port. This bare-bones test is a good first step when everything else fails.
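The same bare-bones loop test can also be run from a script. This Python sketch uses only the standard termios, tty and select modules and assumes Linux or another POSIX system. open_raw and roundtrip are hypothetical helper names, and the 2-second timeout is an arbitrary choice.

```python
import os
import select
import termios
import tty


def open_raw(path: str, speed: int = termios.B9600) -> int:
    """Open a serial device in raw mode: no local echo, no line editing."""
    # O_NONBLOCK keeps open() from waiting for a carrier (DCD) signal
    fd = os.open(path, os.O_RDWR | os.O_NOCTTY | os.O_NONBLOCK)
    tty.setraw(fd)  # raw mode also switches off ECHO
    attrs = termios.tcgetattr(fd)
    attrs[2] |= termios.CLOCAL | termios.CREAD  # ignore modem lines, enable receiver
    attrs[4] = attrs[5] = speed                 # input and output speed
    termios.tcsetattr(fd, termios.TCSANOW, attrs)
    return fd


def roundtrip(write_fd: int, read_fd: int, payload: bytes, timeout: float = 2.0) -> bytes:
    """Send payload and collect up to len(payload) bytes that come back."""
    os.write(write_fd, payload)
    received = b""
    while len(received) < len(payload):
        ready, _, _ = select.select([read_fd], [], [], timeout)
        if not ready:
            break  # nothing (more) arrived in time
        chunk = os.read(read_fd, len(payload) - len(received))
        if not chunk:
            break
        received += chunk
    return received
```

With Tx and Rx bridged, fd = open_raw("/dev/ttyUSB0") followed by roundtrip(fd, fd, b"AT\r\n") should return b"AT\r\n"; with the bridge removed it should return b"" once the timeout expires. The separate write and read descriptors only exist so the function can be tried without hardware, for example on an os.pipe() pair.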

When connecting older modems to modern computers, you are quite probably using a cheap serial adapter. If things aren't working, your adapter may not be able to interpret the voltage levels that form the signals on the serial line. Many serial adapters are designed for developer boards and expect low TTL voltages of 3.3 or 5 volts. Modems usually use RS-232 connections, which operate at higher voltages and with inverted polarity: a signal swings between roughly -3 and -15 V for one state and +3 and +15 V for the other. So either your adapter gets fried by RS-232 voltage levels, or it is not fit to read the signals on the wire. Many modems run on a 9-volt supply, and their serial interface often uses levels around that voltage. While some TTL serial adapters may tolerate 9 volts, it's better to get a proper RS-232 serial adapter.
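Readings from a multimeter can be interpreted with the RS-232 thresholds. This small Python sketch encodes them, assuming each reading is taken between the pin and signal ground; the function name rs232_level is made up for this example.

```python
def rs232_level(volts: float) -> str:
    """Interpret a voltage measured between an RS-232 pin and signal ground.

    RS-232 uses inverted logic: below -3 V a data line is at "mark"
    (logical 1, also its idle state) and a control line is OFF; above +3 V
    a data line is at "space" (logical 0) and a control line is ON.
    Anything between -3 V and +3 V is undefined.
    """
    if volts < -3.0:
        return "mark/OFF"
    if volts > 3.0:
        return "space/ON"
    return "undefined"
```

A TTL adapter's transmit line idles at about +3.3 or +5 V and never goes negative, so by these thresholds an RS-232 receiver would see it as a constant "space" or as undefined rather than as an idle "mark".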

As said above, you can measure a serial connection with a voltmeter and see the common voltages on the signaling pins. This is useful to determine the voltage level a serial connection operates at, and whether you are looking at the DTE or the DCE side of a connection. DTEs normally feature a male connector, while DCEs usually feature a female connector, but as this is not always the case, it is better to measure. The idea is to find out which of the two data lines, Tx or Rx, carries the more negative voltage: an idle RS-232 transmitter holds its output at a negative voltage (the "mark" state), so the negative pin is the one this device transmits on.

On a DB-9 connector: connect the black multimeter lead ("minus") to pin 5, which is signal ground. Then connect the red lead ("plus") to pin 3 and see whether you get a voltage more negative than -3 V. If so, it's a DTE device. If not, measure pin 2: if you get a voltage below -3 V there, it's a DCE device.

On a DB-25 connector: connect the black multimeter lead ("minus") to pin 7, which is signal ground. Then connect the red lead ("plus") to pin 2 and see whether you get a voltage more negative than -3 V. If so, it's a DTE device. If not, measure pin 3: if you get a voltage below -3 V there, it's a DCE device.
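The decision procedure from the two measurement recipes can be written down as a small Python sketch. The voltages are readings you enter by hand; nothing is measured automatically, and PINS and identify_side are names made up for this example.

```python
# Pin numbers as named from the DTE's point of view.
PINS = {
    "DB-9": {"ground": 5, "txd": 3, "rxd": 2},   # black lead goes on "ground"
    "DB-25": {"ground": 7, "txd": 2, "rxd": 3},
}


def identify_side(connector: str, volts_by_pin: dict[int, float]) -> str:
    """Guess DTE or DCE from idle voltages measured against signal ground.

    An idle transmitter holds its output at mark (below -3 V), so whichever
    of the two data pins is driven negative is the device's output.
    """
    pins = PINS[connector]
    if volts_by_pin.get(pins["txd"], 0.0) < -3.0:
        return "DTE"  # the device drives the pin a DTE transmits on
    if volts_by_pin.get(pins["rxd"], 0.0) < -3.0:
        return "DCE"  # a DCE transmits on the pin the DTE calls RxD
    return "unknown"
```

For example, identify_side("DB-9", {3: -11.0, 2: 0.1}) returns "DTE".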

Some modems may not "answer" on the local computer-to-modem serial connection without a hardware handshake first. Hardware handshaking is explained below; here it is only important to understand that additional pins must be wired through on the serial cable, and that the computer (or adapter) must be able to control those additional pins on its connector. While serial cables shipped with modems are usually fully wired, control over all the pins of a DB-9 DTE connector may be incomplete: whether the terminal can switch specific pins on and off depends on the driver of the serial port and on the serial adapter's implementation. At minimum, the terminal must be able to assert the RTS and DTR signals. That said, in practice this should be a rare issue, and your local serial connection to the modem should work without hardware handshaking.

If you connected your modem and either no "AT" commands are recognized or all you get back are garbled characters, try setting a different baud rate on the port talking to the modem. The DTE rate of your communications program must be set to a value within the speed range your modem recognizes. Usually modems detect the speed of the local connection automatically. If something doesn't look right, restart the modem, reopen your serial port and start with a low bit rate. In day-to-day operation, some modems adjust the local DTE rate to match the outgoing DCE rate, but most support higher bit rates on the local connection, and the local and outgoing rates can usually be set individually.
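Trying several rates by hand can be tedious. The following Python sketch automates the "send AT, look for OK" check over a list of common rates, lowest first. It assumes fd refers to a port that is already open in raw mode (for example via os.open and tty.setraw) and that the modem uses verbose result codes; with numeric result codes (ATV0) it answers "0" instead of "OK". RATES, reply_is_ok and probe_rates are names made up for this example.

```python
import os
import select
import termios
import time

# Common DTE rates, lowest first, paired with their termios constants.
RATES = [
    (300, termios.B300), (1200, termios.B1200), (2400, termios.B2400),
    (9600, termios.B9600), (19200, termios.B19200), (38400, termios.B38400),
    (57600, termios.B57600), (115200, termios.B115200),
]


def reply_is_ok(reply: bytes) -> bool:
    """A Hayes modem with verbose result codes answers "AT" with "OK"."""
    return b"OK" in reply


def probe_rates(fd: int, wait: float = 1.0) -> list[int]:
    """Try each rate on an open raw port; return the rates that got "OK"."""
    working = []
    for rate, constant in RATES:
        attrs = termios.tcgetattr(fd)
        attrs[4] = attrs[5] = constant
        termios.tcsetattr(fd, termios.TCSADRAIN, attrs)
        termios.tcflush(fd, termios.TCIOFLUSH)  # drop leftovers from the last rate
        os.write(fd, b"AT\r")
        time.sleep(wait)                        # give the modem time to answer
        reply = b""
        while select.select([fd], [], [], 0)[0]:
            chunk = os.read(fd, 256)
            if not chunk:
                break
            reply += chunk
        if reply_is_ok(reply):
            working.append(rate)
    return working
```

Because many modems detect the local speed automatically, probe_rates(fd) may well report several working rates rather than just one.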

What is the speed of the serial connection between Computer and Modem?

As described above, the serial line between the computer and your modem is distinct from the line (usually a phone line) between your modem and the outside world. Your modem is connected to an asynchronous communication interface (a "COM port") of your PC. Many modems match the speed of the local serial connection to the speed on the other side, between this modem and the remote modem. The local line may, however, operate at higher data rates than the modem-to-modem connection, and it does not use elaborate compression schemes of its own, which is why it needs the higher rate when the modems compress data between themselves. The speed of the local serial line depends on the capabilities of the computer's COM port. A central component of the COM port is the UART chip ("UART" is short for "universal asynchronous receiver-transmitter"), which handles the actual work of getting signal-encoded bits onto the wire. For modem operation in the 1990s, modem manufacturers usually recommended a COM port with at least a 16550 UART. Earlier UART chips such as the 8250 and 16450 could buffer only a single received character, so the host system had to service the serial port far more frequently via interrupt requests (IRQs). On systems of the time, this usually became an issue at data rates above 9600 bps. The popular National Semiconductor 16550 UART has a 16-byte FIFO ("first in, first out") buffer and can thus handle chunks of serial data on its own, offloading work from the host system. Without a buffering UART, the serial connection couldn't operate without errors or data loss at higher interface rates. With a buffering UART, communication with modems of that era could usually be established at up to 115200 baud.
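A little arithmetic shows why the FIFO matters. This Python sketch assumes the common 8N1 framing (1 start bit, 8 data bits, 1 stop bit, so 10 bits per character) and a receive FIFO trigger level of 14 bytes (one of the 16550's selectable levels of 1, 4, 8 and 14). It computes how often the host gets interrupted.

```python
def chars_per_second(bps: int, bits_per_char: int = 10) -> float:
    """Characters per second; 8N1 framing needs 10 bits per character."""
    return bps / bits_per_char


def char_time_us(bps: int, bits_per_char: int = 10) -> float:
    """Duration of one character on the wire, in microseconds."""
    return 1e6 * bits_per_char / bps


def interrupts_per_second(bps: int, fifo_trigger: int = 1) -> float:
    """Receive interrupts if the UART raises one per `fifo_trigger` characters.

    fifo_trigger=1 models a UART without a FIFO (8250/16450).
    """
    return chars_per_second(bps) / fifo_trigger
```

At 115200 bps that is 11520 characters per second, so a UART without a FIFO interrupts the host 11520 times per second, about every 87 µs, while a 16550 with a trigger level of 14 needs only about 823 receive interrupts per second.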

Most modems support autobaud on the DTE-to-DCE (computer-to-modem) connection, meaning the modem automatically detects the speed at which the connected computer is communicating. With autobaud, the receiving device measures the duration of incoming bits and deduces the baud rate from it. The Hayes command set makes this simple for the modem: every Hayes command begins with the "attention" string "AT". A serial character starts with a start bit (a binary 0), followed by the data bits, least significant bit first. The ASCII letter "A" (binary 1000001) begins and ends with a binary 1, so on the wire its start bit stands alone as a single, cleanly measurable bit-time, and the receiving device can set its speed from that measurement.
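The start-bit measurement can be illustrated with a small simulation. The Python sketch below builds the line levels of an 8N1 character, samples them at an assumed eight samples per bit, and estimates the rate from the first low pulse. It is a toy model, not how any particular modem implements autobaud; all names in it are made up for this example.

```python
STANDARD_RATES = (300, 1200, 2400, 4800, 9600, 19200, 38400, 57600, 115200)


def uart_frame(byte: int) -> list[int]:
    """Line levels for one 8N1 character: start bit 0, data LSB first, stop bit 1."""
    return [0] + [(byte >> i) & 1 for i in range(8)] + [1]


def first_low_run(samples: list[int]) -> int:
    """Number of samples in the first run of 0s (the start bit, plus any 0 data bits)."""
    start = samples.index(0)
    end = start
    while end < len(samples) and samples[end] == 0:
        end += 1
    return end - start


def estimate_baud(low_run: int, sample_rate: float) -> int:
    """Treat the low run as one bit and pick the nearest standard rate."""
    measured = sample_rate / low_run  # bits per second
    return min(STANDARD_RATES, key=lambda r: abs(r - measured))
```

For "A", the first low pulse is exactly one bit long, so with samples = [1] * 16 + [level for level in uart_frame(ord("A")) for _ in range(8)] the call estimate_baud(first_low_run(samples), 8 * 9600) yields 9600. For "T" (hex 54, whose two lowest data bits are 0), the start bit merges with two more 0 bits, and the same measurement would wrongly suggest 2400.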

Handshaking (Flow Control)

Communicating devices use some way of telling each other when they are ready and how they'd like to communicate. This is usually called "handshaking". In serial communication, handshaking is also called "flow control", and its main purpose is to tell the other side when the receiving end is ready to receive data, or when the sending side should stop and wait until the receiver has processed the data it already got. This way the interfaces prevent data from being dropped because one end is overloaded. Handshaking may happen in hardware, in software or in a combination of both.

Software flow control uses special codes transmitted in-band, over the primary communications channel. These codes are generally called XOFF and XON (from "transmit off" and "transmit on"), which is why software flow control is sometimes called "XON/XOFF flow control". To control the data flow, the receiver sends XOFF to tell the transmitter to pause and XON to let it resume. XON is the unprintable ASCII character "Device Control 1" ("DC1", decimal 17, hexadecimal 11), and XOFF is "Device Control 3" ("DC3", decimal 19, hexadecimal 13). They are usually not used in text, but these byte values may well occur in a binary transmission and lead to data corruption if not handled properly.
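The following Python sketch shows what a transmitter does with XON/XOFF bytes arriving from the receiver, and why binary data suffers: a data byte that happens to equal DC1 or DC3 is swallowed as a control code. The function name strip_flow_control is made up for this example.

```python
XON, XOFF = 0x11, 0x13  # DC1 and DC3


def strip_flow_control(incoming: bytes) -> tuple[bytes, bool]:
    """Separate in-band XON/XOFF codes from the data around them.

    Returns the remaining data bytes and whether the peer's last request
    was XOFF, i.e. whether we should currently pause sending.
    """
    data = bytearray()
    paused = False
    for b in incoming:
        if b == XOFF:
            paused = True   # peer asks us to stop transmitting
        elif b == XON:
            paused = False  # peer lets us resume
        else:
            data.append(b)
    return bytes(data), paused
```

For text, strip_flow_control(b"ab\x13cd\x11ef") cleanly returns (b"abcdef", False), but the binary bytes 10 11 12 (hex) come out as just 10 12: the middle byte was mistaken for XON.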

Hardware handshaking, or hardware flow control, uses dedicated out-of-band signals, that is, separate signal lines on the RS-232 connection apart from the TX/RX lines.

On the "Request to Send" ("RTS") pin (pin 4 on a DB-25 connector, pin 7 on a DB-9), the transmitter sends a signal to the receiver. On the "Clear to Send" ("CTS") pin (pin 5 on a DB-25, pin 8 on a DB-9), the receiver signals back to the transmitter, indicating "yes, ready". Normally, these pins are "on" throughout a communication session, where "on" means the pin is asserted (logic "high"). In some terminal applications, you can toggle these pin states of your COM port / serial interface manually for debugging purposes. On Linux, this is done through ioctl() system calls.
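On Linux, the ioctl() requests involved are TIOCMBIS (set line bits), TIOCMBIC (clear line bits) and TIOCMGET (read the current line states). The Python sketch below wraps them using the standard fcntl, struct and termios modules. It assumes fd is an open serial port and that your Python's termios module exposes these constants, as it does on typical Linux builds; the helper names are made up for this example.

```python
import fcntl
import struct
import termios


def _set_bits(fd: int, bits: int, on: bool) -> None:
    """Assert (TIOCMBIS) or clear (TIOCMBIC) modem control lines."""
    request = termios.TIOCMBIS if on else termios.TIOCMBIC
    fcntl.ioctl(fd, request, struct.pack("i", bits))


def set_rts_dtr(fd: int, rts: bool, dtr: bool) -> None:
    """Switch the two DTE outputs, RTS and DTR, on or off."""
    _set_bits(fd, termios.TIOCM_RTS, rts)
    _set_bits(fd, termios.TIOCM_DTR, dtr)


def decode_lines(bits: int) -> dict[str, bool]:
    """Name the bits of a TIOCMGET result."""
    masks = {"RTS": termios.TIOCM_RTS, "DTR": termios.TIOCM_DTR,
             "CTS": termios.TIOCM_CTS, "DSR": termios.TIOCM_DSR,
             "DCD": termios.TIOCM_CAR, "RI": termios.TIOCM_RNG}
    return {name: bool(bits & mask) for name, mask in masks.items()}


def read_lines(fd: int) -> dict[str, bool]:
    """Read the current state of all modem control and status lines."""
    raw = fcntl.ioctl(fd, termios.TIOCMGET, struct.pack("i", 0))
    return decode_lines(struct.unpack("i", raw)[0])
```

For example, set_rts_dtr(fd, True, True) asserts both outputs, and read_lines(fd)["CTS"] then tells you whether the modem has answered with Clear to Send.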

A typical handshake looks like this: the DTE (your PC, for example) wants to send data and asks for permission by setting its RTS output high. No data is sent until the DCE (for example, your modem) grants permission by setting the CTS line high. This may change during a session whenever the "ready state" of one end changes, for example while it processes data or when a buffer is full. A more general signaling mechanism uses the "DTR" ("Data Terminal Ready") and "DSR" ("Data Set Ready") pins, on which the connected devices signal general readiness. DTR and DSR usually stay in one state, high or low, for a whole connection session. Two pins are specifically reserved for modems: pin 1 on a DB-9 connector (pin 8 on a DB-25) is "DCD" ("Data Carrier Detect") and is high whenever a modem has established a connection with remote equipment. Pin 9 on a DB-9 (pin 22 on a DB-25) is "RI" ("Ring Indicator"), the equivalent of the bell ringing on a telephone: it goes high when a call comes in. Older modems in particular have LED indicators on their front panels showing the status of these pins, the "flags" of the ready-state mechanism.
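The RTS/CTS exchange can be illustrated with a toy simulation in Python: a fast DTE sends bursts of bytes into a DCE with a small buffer, and the DCE drains one byte per tick onto the slower phone line. The DTE only sends while CTS is high, so nothing is dropped even though the buffer is tiny. Buffer size, burst length, the one-byte-per-tick drain rate and the name simulate_rts_cts are assumptions made for this example.

```python
from collections import deque


def simulate_rts_cts(data: bytes, buffer_size: int, burst: int) -> tuple[bytes, int]:
    """Toy RTS/CTS model; returns the bytes sent down the line and the peak buffer fill."""
    pending = deque(data)  # DTE keeps RTS asserted while this is non-empty
    buffer = deque()       # the DCE's small receive buffer
    line = bytearray()
    max_fill = 0
    while pending or buffer:
        # DTE side: send up to `burst` bytes per tick, but only while CTS is high
        sent = 0
        while pending and sent < burst:
            cts = len(buffer) < buffer_size  # DCE drops CTS when its buffer is full
            if not cts:
                break                        # DTE waits; nothing is dropped
            buffer.append(pending.popleft())
            sent += 1
        max_fill = max(max_fill, len(buffer))
        # DCE side: forward one byte per tick over the slower phone line
        if buffer:
            line.append(buffer.popleft())
    return bytes(line), max_fill
```

With simulate_rts_cts(b"HELLO MODEM", buffer_size=4, burst=3), the full message arrives in order, and the buffer never holds more than its 4 bytes.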

What about USB Modems?

Around the year 2000, manufacturers moved from traditional RS-232 serial devices to modems with a built-in USB interface. This is more convenient, not only because the USB bus is easier to handle and operate, but also because it allows modems to be powered via the bus. This made the additional cord to a wall-wart power supply obsolete and cable wrangling easier.

On Linux, USB analog modems may show up in /var/log/syslog as TTY device /dev/ttyACM0, and the system runs them via the cdc_acm driver, the "USB Abstract Control Model driver for USB modems and ISDN adapters". Permission issues, group membership, etc. on the TTY device are the same as with a serially connected device, though.

Is it possible to connect two modems "back to back"?

It is possible to connect two modems locally, "back to back", with a cable that directly connects the telephone line connectors of the two modems, so you have a local link without a round trip through your telephone company. The difference from a line provided by your phone company is that the wire between your modems has no supply voltage applied. This is called a "dry line". Whether this works depends on your modem. If it doesn't, you can apply a simple supply voltage to the line to simulate a proper telephone line. Another option is to use a local PBX system, where you call extension numbers locally. In summary, it's a bit of a DIY project, but it is doable.

There are also commercial products to connect telephones or modems without a real telephone line, for local debugging or testing. Popular solutions are the Viking DLE-200B, the Teltone TLS-4 Phone Line Simulator and the Chesilvale TSLS and TSLS2 Dual Standard Telephone Switching and Line Exchange Simulator.

Another scenario for two directly connected modems is a "leased line". A leased line, your own "copper pair", is a private telecommunications circuit between two or more locations, either provided by a telephone company or privately operated. Such private circuits or data lines sometimes resemble a local "dry line", and vendors sell special modems to establish data link connections on such infrastructure. Some home user modems can still be switched between a mode for lines with voltage on them and a mode for "dry lines". Modems for private circuits are still widely used, and their communication standards developed far beyond the point where common analog modems for home use stagnated around the year 2000. Modern leased line modems are comparable with the VDSL or ADSL modems used as broadband terminators in private homes.

Any good telephone numbers I can call to test my modem?

You can call the US National Institute of Standards and Technology (NIST) and the US Naval Observatory (USNO). They provide time services with accurate data from their atomic clocks, which can be polled via modem at 9600 bps:

NIST:

+1 (303) 494-4774 (Boulder, CO)

+1 (808) 335-4721 (Hawaii)

USNO:

+1 (202) 762-1594 (Washington, DC)

+1 (719) 567-6743 (Colorado Springs, CO)
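Once connected to the NIST service, the modem delivers lines of text carrying the current time. This Python sketch assumes only that each NIST time-code line starts with the Modified Julian Date (MJD), the date as YR-MO-DA and the UTC time as HH:MM:SS; all other fields are ignored, and the USNO format is not covered. Cross-checking the MJD against the date catches lines garbled by a noisy connection. The function name parse_time_line is made up for this example.

```python
from datetime import date, datetime

MJD_EPOCH = date(1858, 11, 17)  # Modified Julian Date 0


def parse_time_line(line: str) -> datetime:
    """Parse the leading 'MJD YR-MO-DA HH:MM:SS' fields of a time-code line.

    Raises ValueError if a field is missing or malformed, or if the
    MJD does not match the date (e.g. after line noise).
    """
    mjd_text, date_text, time_text = line.split()[:3]
    stamp = datetime.strptime(f"{date_text} {time_text}", "%y-%m-%d %H:%M:%S")
    if (stamp.date() - MJD_EPOCH).days != int(mjd_text):
        raise ValueError(f"MJD {mjd_text} does not match date {date_text}")
    return stamp  # naive datetime, to be read as UTC
```

Feed it each line your terminal receives after the CONNECT message, skipping lines that raise ValueError.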

Also, the Telnet BBS Guide can be filtered for BBSes with dial-up nodes available.